Imagen from Google AI

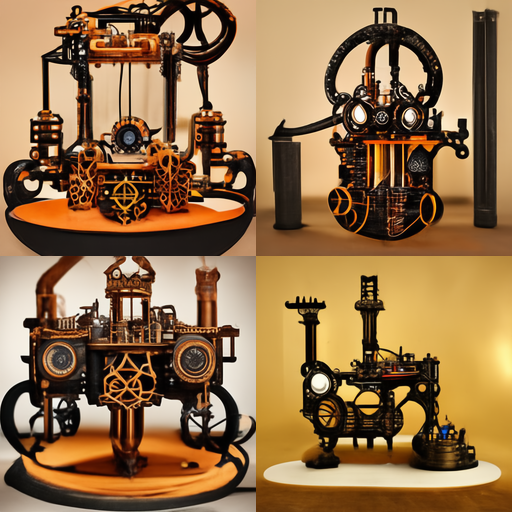

*It’s another text-to-image generator, and it’s pretty good. “Steampunk 3D printers”*

https://imagen.research.google/

Imagen research highlights

- We show that large pretrained frozen text encoders are very effective for the text-to-image task.

- We show that scaling the pretrained text encoder size is more important than scaling the diffusion model size.

- We introduce a new thresholding diffusion sampler, which enables the use of very large classifier-free guidance weights.

- We introduce a new Efficient U-Net architecture, which is more compute efficient, more memory efficient, and converges faster.

- On COCO, we achieve a new state-of-the-art COCO FID of 7.27; and human raters find Imagen samples to be on-par with reference images in terms of image-text alignment.
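
The thresholding and guidance highlight is easier to picture with a little code. What follows is a minimal sketch of my reading of the idea, not Google's implementation. Classifier-free guidance pushes the conditional prediction away from the unconditional one by a weight `w`, and a large `w` can drive the predicted image far outside the valid pixel range of [-1, 1]. Static thresholding just clips to that range. Dynamic thresholding picks a percentile `s` of the absolute pixel values, clips to [-s, s], then divides by `s`. The function names, the flat-list image representation, and the default percentile of 0.995 are my own assumptions for illustration.

```python
# Illustrative sketch only: images are flat lists of floats in [-1, 1].
# Function names and the default percentile are assumptions, not Imagen's code.

def guided_prediction(cond, uncond, w):
    """Classifier-free guidance: uncond + w * (cond - uncond).

    w = 1 returns the conditional prediction unchanged;
    w > 1 exaggerates the effect of the text conditioning.
    """
    return [u + w * (c - u) for c, u in zip(cond, uncond)]


def static_threshold(x0):
    """Clip every predicted pixel to [-1, 1]."""
    return [max(-1.0, min(1.0, v)) for v in x0]


def dynamic_threshold(x0, percentile=0.995):
    """Clip to [-s, s] and rescale by s, where s is a percentile of |x0|.

    s is never allowed below 1, so predictions already inside [-1, 1]
    pass through unchanged.
    """
    mags = sorted(abs(v) for v in x0)
    idx = min(len(mags) - 1, int(percentile * len(mags)))
    s = max(mags[idx], 1.0)
    return [max(-s, min(s, v)) / s for v in x0]


if __name__ == "__main__":
    cond = [0.9, -0.2, 0.4, 0.0]
    uncond = [0.1, 0.1, 0.1, 0.0]
    x0 = guided_prediction(cond, uncond, w=8.0)  # large guidance weight
    print("guided:", x0)
    print("static:", static_threshold(x0))
    print("dynamic:", dynamic_threshold(x0, percentile=0.5))
```

With static clipping, every pixel that overshoots ends up pinned at exactly -1 or 1, which is one way to get flat, oversaturated regions. The dynamic version rescales instead, so only the most extreme pixels hit the limits and the rest keep their relative differences.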